Background

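In our MoTouch project, the video area of a live room is built with a Flutter platform view plus the Thunder SDK. During development and testing, the iOS side kept hitting unexplained black screens, with the entire remote view going black. The logs showed that both Thunder's remote-stream notification and the first-frame callback for the remote view had arrived, yet the video area stayed black. We suspected that the business layer was calling an interface incorrectly. This post records how the problem was analyzed and fixed.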

Root cause analysis

Where did the black screen come from?

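Since Thunder's first-frame callback had already arrived, the fault most likely lay in how the business layer handled things. My first questions were: has this platform view actually been added to the view hierarchy, and is its frame correct?

After reproducing the black screen in a debug build, I inspected the view hierarchy in Xcode and found that the frame and size were both fine at that point. Only with help from a colleague on the SDK team did we find the cause: when FlutterThunder.setRemoteVideoLayout was called, the view's frame was still zero, so the SDK laid out the video with a zero frame. The view's frame became correct later, but the new value was never passed on to FlutterThunder.

    Widget _remoteMixinWidget() {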
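To make the failure mode concrete, here is a minimal C++ sketch of a "stale snapshot" bug of this kind. Everything in it is hypothetical: Rect, NativeView, and VideoSdkModel are stand-ins invented for illustration, and the model simply assumes that the SDK copies the view's frame at call time, which is what the symptoms above suggest rather than something confirmed from the Thunder SDK source.

```cpp
#include <cassert>

// Hypothetical stand-ins; none of these are real Thunder SDK or UIKit types.
struct Rect {
  double x = 0, y = 0, width = 0, height = 0;
  bool isEmpty() const { return width <= 0 || height <= 0; }
};

struct NativeView {
  Rect frame;  // starts at zero, like a freshly created platform view
};

class VideoSdkModel {
 public:
  // Assumption: the SDK copies the frame when the layout call is made,
  // so later changes to the view are never seen.
  void setRemoteVideoLayout(const NativeView& view) { videoFrame_ = view.frame; }
  bool wouldRenderBlack() const { return videoFrame_.isEmpty(); }

 private:
  Rect videoFrame_;
};

// Bug order: the layout call happens first, the real frame arrives later.
inline bool layoutBeforeFrameIsSet() {
  NativeView view;
  VideoSdkModel sdk;
  sdk.setRemoteVideoLayout(view);  // captures a zero frame
  view.frame.width = 360;          // the frame is corrected afterwards...
  view.frame.height = 640;         // ...but the SDK model is not told
  return sdk.wouldRenderBlack();
}

// Fixed order: the frame is set first, then the layout call is made.
inline bool layoutAfterFrameIsSet() {
  NativeView view;
  VideoSdkModel sdk;
  view.frame.width = 360;
  view.frame.height = 640;
  sdk.setRemoteVideoLayout(view);  // captures the real frame
  return sdk.wouldRenderBlack();
}
```

In this model layoutBeforeFrameIsSet() reports a black video while layoutAfterFrameIsSet() does not, so the rest of the investigation comes down to finding a point in time at which the native frame is already correct.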

Why is the frame zero?

Let's dig into the engine source.

    //FlutterPlatformViews.mm

So when a platform view is first created, its frame is always CGRectZero.

But when does it become correct?

    - (instancetype)initWithEmbeddedView:(UIView*)embeddedView

As we can see, the FlutterPlatformView is actually wrapped in a FlutterTouchInterceptingView, and its frame follows the size of that FlutterTouchInterceptingView.

So let's follow the trail and see where the frame of the FlutterTouchInterceptingView gets set to the right value.

    void FlutterPlatformViewsController::CompositeWithParams(int view_id,

Solution

addPostFrameCallback?

To be honest, on the Flutter side we hardly ever manipulate the native frame directly. Here is how we set the size and position of the platform view:

    Widget remoteView() {

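As the code shows, we set the position and size on the platform view's parent node (a Container or similar), and the platform view follows the size of its parent.

So when are the platform view's size and position actually determined on the Flutter side?

A quick Google search suggests printing them inside a post-frame callback:

    WidgetsBinding.instance.addPostFrameCallback((_) {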

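WidgetsBinding acts as the bridge between the engine and the widget layer. A callback registered with addPostFrameCallback runs once, at the end of the current frame, after that frame's build, layout, and paint work on the Dart side has finished.

Whether addPostFrameCallback can be relied on here is something we need to check against the source.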

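Source code analysis

Key points to review

Since this involves interaction between .mm files and Dart, let's first look at how C++ and Dart talk to each other:

Interaction between C++ (Engine) and Dart (Framework)

It is concentrated in these files: window.dart, window.cc, and hooks.dart.

Dart calling C++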

window.cc

    // Register the native methods
    void Window::RegisterNatives(tonic::DartLibraryNatives* natives) {
      natives->Register({
          {"Window_defaultRouteName", DefaultRouteName, 1, true},
          {"Window_scheduleFrame", ScheduleFrame, 1, true},
          {"Window_sendPlatformMessage", _SendPlatformMessage, 4, true},
          {"Window_respondToPlatformMessage", _RespondToPlatformMessage, 3, true},
          {"Window_render", Render, 2, true},
          {"Window_updateSemantics", UpdateSemantics, 2, true},
          {"Window_setIsolateDebugName", SetIsolateDebugName, 2, true},
          {"Window_reportUnhandledException", ReportUnhandledException, 2, true},
          {"Window_setNeedsReportTimings", SetNeedsReportTimings, 2, true},
          {"Window_getPersistentIsolateData", GetPersistentIsolateData, 1, true},
      });
    }

    ···

    void Render(Dart_NativeArguments args) {
      Dart_Handle exception = nullptr;
      Scene* scene =
          tonic::DartConverter<Scene*>::FromArguments(args, 1, exception);
      if (exception) {
        Dart_ThrowException(exception);
        return;
      }
      UIDartState::Current()->window()->client()->Render(scene);  // this client() is actually the engine
    }

window.dart

    void render(Scene scene) native 'Window_render';  // actually calls the native method registered in window.cc
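The name-based binding between `native 'Window_render'` on the Dart side and the `{"Window_render", Render, 2, true}` entry on the C++ side can be pictured as a lookup table. The sketch below is only a toy model of that idea: NativeRegistryModel, registerNative, resolve, and renderStub are hypothetical names, and the rule that both the name and the argument count must match is an assumption of the model, not a quote of tonic's implementation.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical signature for a registered native function in this model.
using NativeFunction = int (*)(const std::vector<int>& args);

struct NativeEntry {
  NativeFunction function;
  int argumentCount;
};

// Toy registry: maps a symbolic name to a C++ function, the way the
// registration table above pairs "Window_render" with Render.
class NativeRegistryModel {
 public:
  void registerNative(const std::string& name, NativeFunction fn, int argc) {
    entries_[name] = NativeEntry{fn, argc};
  }

  // Returns nullptr when the name is unknown or the argument count differs
  // (the argument-count check is an assumption of this model).
  NativeFunction resolve(const std::string& name, int argc) const {
    auto it = entries_.find(name);
    if (it == entries_.end() || it->second.argumentCount != argc) {
      return nullptr;
    }
    return it->second.function;
  }

 private:
  std::map<std::string, NativeEntry> entries_;
};

// Stand-in for a native implementation; it just reports how many
// arguments it received.
inline int renderStub(const std::vector<int>& args) {
  return static_cast<int>(args.size());
}

// Builds a registry holding a single entry, mirroring one table row.
inline NativeRegistryModel makeRegistry() {
  NativeRegistryModel registry;
  registry.registerNative("Window_render", renderStub, 2);
  return registry;
}
```

Calling makeRegistry().resolve("Window_render", 2) yields renderStub, while an unknown name or a mismatched argument count yields nullptr, which is the gist of how a Dart `native` declaration finds its C++ counterpart by name.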

C++ calling Dart

hooks.dart

    @pragma('vm:entry-point')

window.dart

    VoidCallback get onDrawFrame => _onDrawFrame;

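Relevant interaction flow

The full rendering pipeline is rather long, so here is a plain-language summary of just the steps that matter for this post:

The engine listens for the Vsync signal and, through _drawFrame, tells the framework layer to get its data ready (the flutter::LayerTree).

The framework layer receives the engine's messages in window.onBeginFrame and window.onDrawFrame, converts the widgets' UI configuration into Layers, and produces a LayerTree as the final result, which it sends back to the engine through render().

The engine processes the LayerTree on the GPU thread, mainly by having the rasterizer rasterize it (drawing the LayerTree onto an SkCanvas).

(Remaining steps omitted.)

For a more detailed walkthrough of the interaction flow, see this blog post: http://gityuan.com/2019/06/15/flutter_ui_draw/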

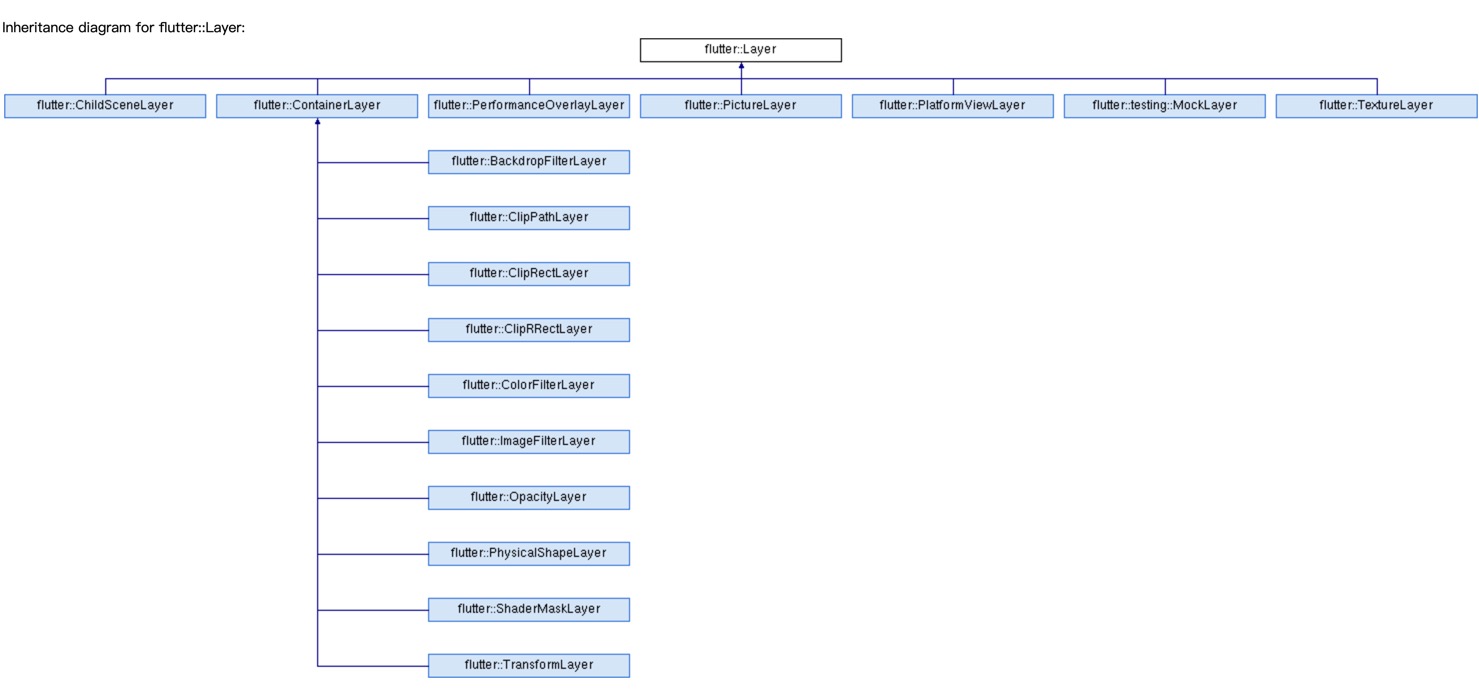

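When writing UI we never touch Layers directly; the framework layer does the conversion. As the class diagram at the link below shows, Container corresponds to flutter::ContainerLayer and PlatformView corresponds to flutter::PlatformViewLayer, and both inherit from flutter::Layer.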

https://engine.chinmaygarde.com/classflutter_1_1_layer.html

Once the source trail reaches PlatformViewLayer, we are not far from the answer.

Call flow

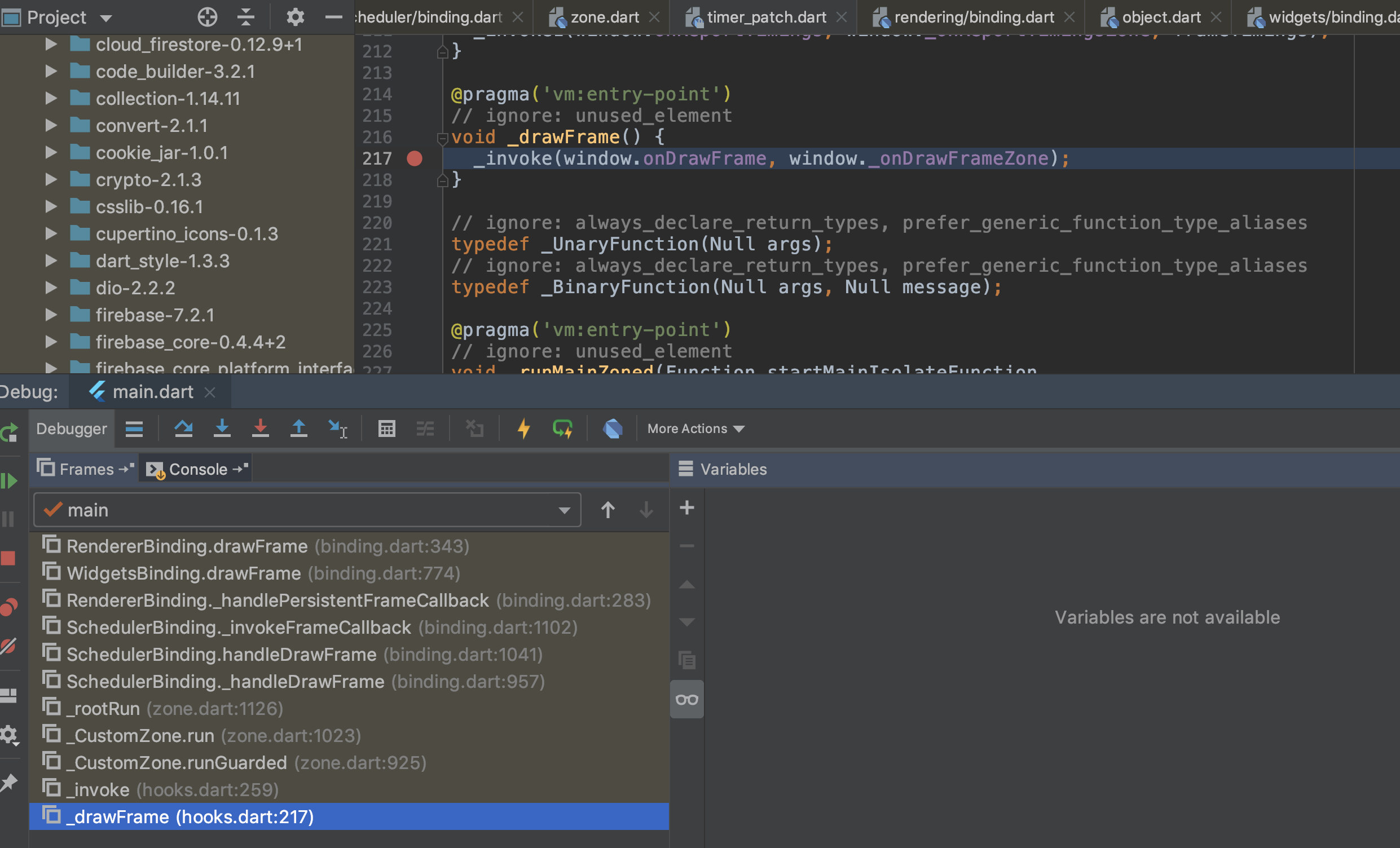

Let's see what actually happens before a post-frame callback is invoked.

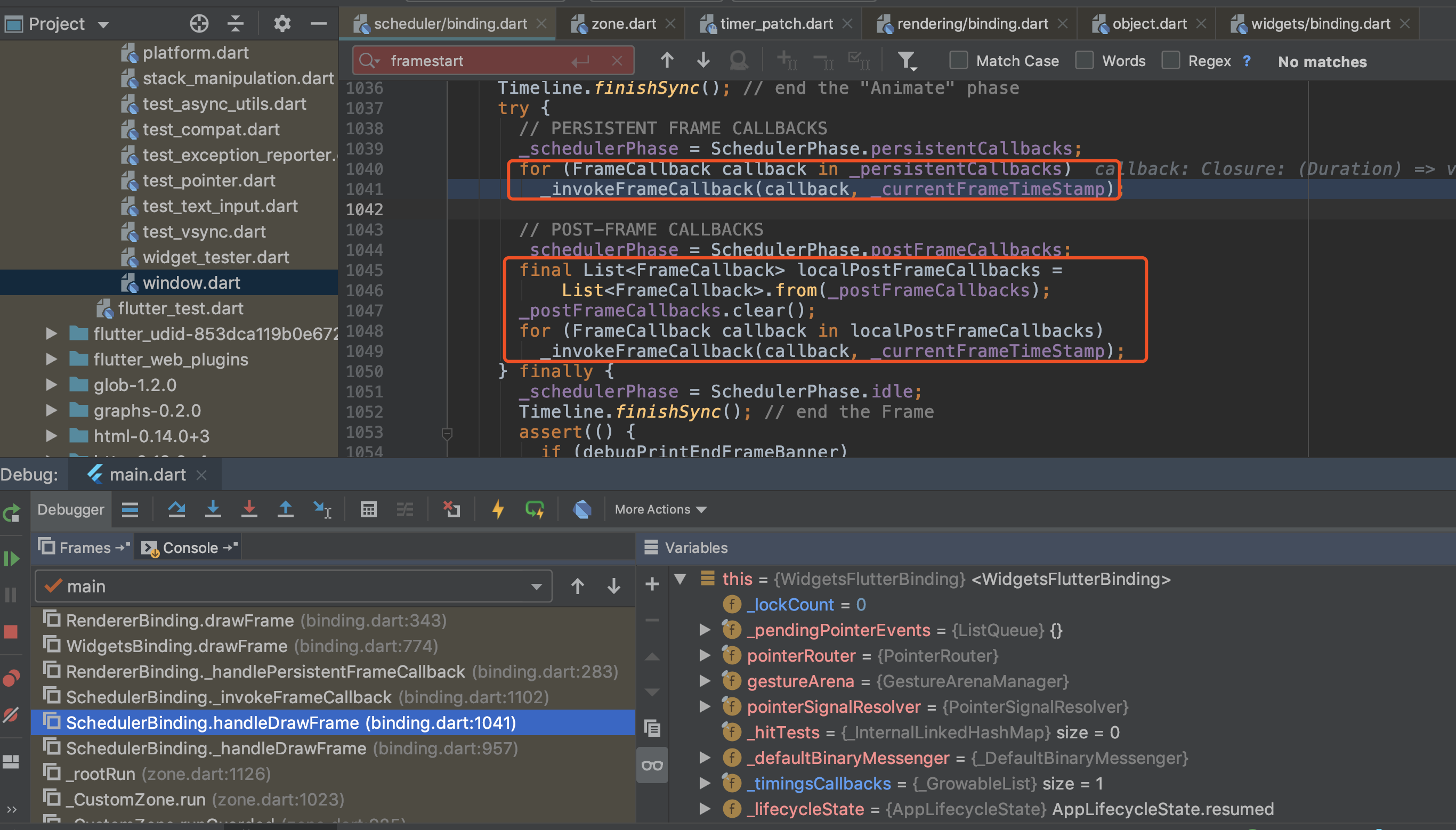

First, the _persistentCallbacks are run in order, the key one being the drawFrame() shown below, and only then are the post-frame callbacks run.

    ///rendering/binding.dart
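The ordering within one frame can be sketched with a small model. This is a simplified illustration written for this post, not the framework's code: FrameSchedulerModel and runTwoFrames are hypothetical, and only the two behaviours described above are modelled, namely that persistent callbacks run before post-frame callbacks and that a post-frame callback fires once.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// A simplified model of callback ordering inside one frame.
class FrameSchedulerModel {
 public:
  using Callback = std::function<void()>;

  // Persistent callbacks stay registered and run on every frame.
  void addPersistentFrameCallback(Callback cb) {
    persistent_.push_back(std::move(cb));
  }

  // Post-frame callbacks run once, at the end of the next frame.
  void addPostFrameCallback(Callback cb) {
    postFrame_.push_back(std::move(cb));
  }

  void handleDrawFrame() {
    // 1. Persistent callbacks first (this is where drawFrame() lives).
    for (auto& cb : persistent_) cb();
    // 2. Then the post-frame callbacks; the list is swapped out first so
    //    each callback fires exactly once.
    std::vector<Callback> pending;
    pending.swap(postFrame_);
    for (auto& cb : pending) cb();
  }

 private:
  std::vector<Callback> persistent_;
  std::vector<Callback> postFrame_;
};

// Runs two frames and records the order in which callbacks fire.
inline std::vector<std::string> runTwoFrames() {
  std::vector<std::string> log;
  FrameSchedulerModel scheduler;
  // Registration order does not matter: the post-frame callback is added first.
  scheduler.addPostFrameCallback([&log] { log.push_back("postFrame"); });
  scheduler.addPersistentFrameCallback([&log] { log.push_back("drawFrame"); });
  scheduler.handleDrawFrame();  // frame 1
  scheduler.handleDrawFrame();  // frame 2
  return log;
}
```

In this model, by the time a post-frame callback runs, drawFrame() has already finished for that frame, which is why the Dart-side layout is settled when the callback fires.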

Inside drawFrame(), renderView.compositeFrame() is what triggers the hand-off: through _window.render, the widget UI data from the Dart layer is passed back to the engine's C++ layer:

    void compositeFrame() {

    //Animator.cc

From here on, execution has moved to the GPU thread.

    ///shell.cc

    ///Rasterizer.cc

    RasterStatus Rasterizer::DoDraw(

    RasterStatus Rasterizer::DrawToSurface(flutter::LayerTree& layer_tree) {

    ///compositor_context.cc

    ///layer_tree.cc

PlatformViewLayer inherits from flutter::Layer, so let's focus on PlatformViewLayer.

    ///Platform_view_layer.cc

    ///FlutterPlatformviews.mm

And finally we arrive back here, at setFrame:

    void FlutterPlatformViewsController::CompositeWithParams(int view_id,

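Analysis

Looking at the whole call flow, execution switches from the UI thread (Dart) to the GPU thread, and the final setFrame also runs on the GPU thread, while the post-frame callback runs in the Dart layer. So the post-frame callback and setFrame are not on the same thread, and even though the code reads sequentially, setFrame is not guaranteed to happen before the post-frame callback runs.

Does that mean addPostFrameCallback cannot fix the zero-frame problem after all?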

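The twist

As we all know, the Dart layer has only one thread, but the engine layer is a different story:

When IsIosEmbeddedViewsPreviewEnabled is true,

platform and GPU share one thread, which is the main thread

I/O gets a thread of its own

UI, i.e. the Dart layer, gets another thread of its own;

In all other cases, platform, GPU, I/O, and UI each get their own thread.

For more on engine threads, see: https://zhuanlan.zhihu.com/p/38026271

    ///FlutterEngine.mm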

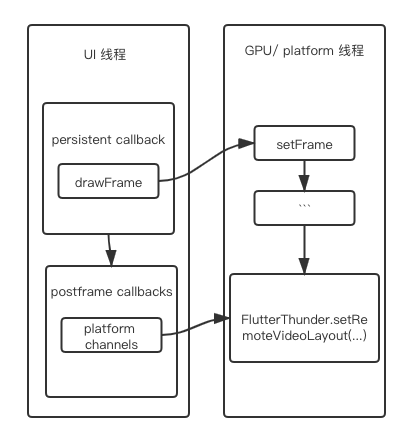

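What does it mean for platform and GPU to share one thread?

It means that any platform channel call made inside addPostFrameCallback is guaranteed to run after setFrame: render() has already queued the raster work that ends in setFrame on that shared thread before the post-frame callback sends its channel message, and the thread handles its tasks in order.

    WidgetsBinding.instance.addPostFrameCallback((_) {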
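The ordering argument can be checked with a small single-threaded model of the task queues. This is a sketch under stated assumptions, not engine code: TaskQueueModel and simulateFrame are hypothetical names, each queue stands in for one thread's message loop and is assumed to run tasks in FIFO order, and "which thread gets there first" is reduced to a flag that picks which queue is drained first.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// A FIFO task queue standing in for one engine thread's message loop.
class TaskQueueModel {
 public:
  void post(std::function<void()> task) { tasks_.push_back(std::move(task)); }

  // Runs every queued task in the order it was posted.
  void drain() {
    while (!tasks_.empty()) {
      auto task = std::move(tasks_.front());
      tasks_.pop_front();
      task();
    }
  }

 private:
  std::deque<std::function<void()>> tasks_;
};

// Simulates one frame as seen from the UI thread and returns the order in
// which the two native-side steps ran.
// sharedThread: platform and GPU use the same queue (embedded views preview).
// platformDrainsFirst: with separate threads, models the platform thread
// happening to run before the GPU thread.
inline std::vector<std::string> simulateFrame(bool sharedThread,
                                              bool platformDrainsFirst) {
  std::vector<std::string> log;
  TaskQueueModel raster;
  TaskQueueModel separatePlatform;
  TaskQueueModel& platform = sharedThread ? raster : separatePlatform;

  // UI thread, step 1: render() hands the layer tree to the GPU queue;
  // rasterizing it ends in setFrame on the platform view.
  raster.post([&log] { log.push_back("setFrame"); });
  // UI thread, step 2: the post-frame callback sends a channel message.
  platform.post([&log] { log.push_back("setRemoteVideoLayout"); });

  if (sharedThread) {
    raster.drain();  // one queue, so posting order is execution order
  } else if (platformDrainsFirst) {
    separatePlatform.drain();
    raster.drain();
  } else {
    raster.drain();
    separatePlatform.drain();
  }
  return log;
}
```

With a shared queue the model always runs setFrame first, whatever the second flag says. With separate queues the order depends on which one happens to run first, which is exactly the race described in the analysis above.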

Conclusion

After going around in a big circle, the final solution is to move the frame-related calls into addPostFrameCallback.


The final flow is shown in the figure:

References:

https://juejin.im/post/5e6b5b11f265da57187c64bd

https://juejin.im/post/5c24acd5f265da6164141236